I love to run experiments – and to give tours of my lab. Visitors usually get excited by our underwater swarm robots and start fantasizing. “When will you throw your robots into the ocean to swim along with real fish and explore coral reefs?” a woman asked. I paused, aware that my answer would disappoint her at first. Then I said: “I wish I could plan for that great moment, but I can't. The gap between the intelligence of a fish and that of my underwater robots is still too large.” Knowing the differences between intelligence and artificial intelligence might have helped her to form realistic expectations of each. As research in artificial intelligence progresses, it will become increasingly important for all of us to understand the advantages and disadvantages of purely human decision-making and performance compared to computer algorithms.

Translating from intelligence to artificial intelligence

The artificial intelligence of my underwater swarm robots may suffice for the artificial world inside my laboratory. Moving my robots to the real world, the Atlantic Ocean for example, would expose them to the challenges of a dynamic environment. Their intelligence would fail spectacularly when confronted with tasks and conditions that change all the time, and it would collapse under a messy flood of data. Unlike a living intelligence such as a fish, my artificially intelligent robots do not yet have the ability to set and achieve goals in unknown environments.

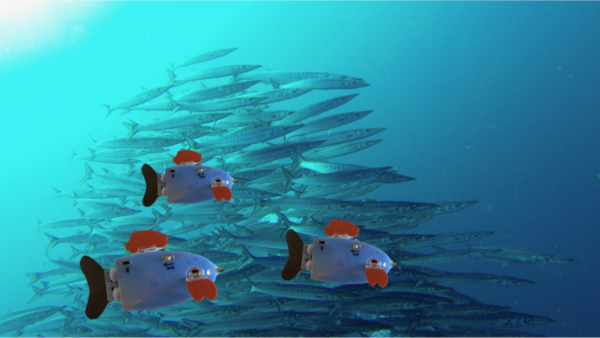

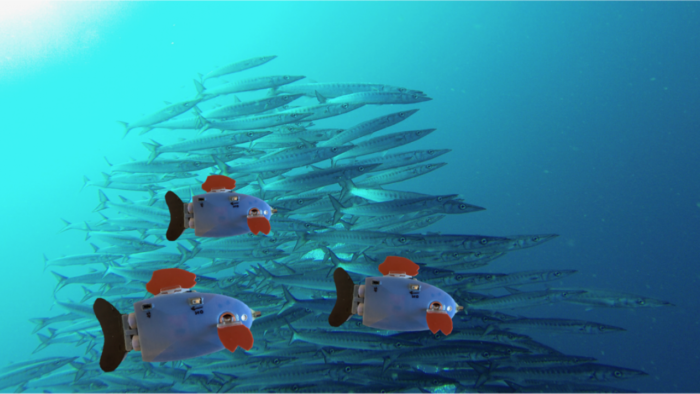

One day, however, I hope to mimic animal behavior and replicate the dynamics of swarms. What fascinates me is how collective behaviors emerge from large numbers of individually limited entities that cooperate with each other. To experience collective behaviors first hand, I undertake field trips to coral reefs, where I observe schools of fish and take inspiration from them. When looking at fish, I ask myself questions such as: What does an individual need to know about its companions in order to coordinate its behavior? What does a fish know about its school, and what decisions does it make based on that information?

Back in the lab, I am, to some extent, translating the intelligence of living fish into the artificial intelligence that drives my robots. For now, I have just three robots, which I test and analyze in a big tank of water. My work includes the design, manufacture, and programming of robots. The long-term goal is to create a swarm of bio-inspired underwater robots capable of demonstrating behaviors observed during field trips, such as milling about the same spot, or aggregation and dispersion in three-dimensional space. To this end, I study collective intelligence and the coordination of multi-agent systems. I hope that my visitors’ dreams come true and that, through their collective behavior, my robots will contribute to faster search missions, safer harbors and oil platforms, and environmental monitoring in constrained areas such as coral reefs.

Artificial aids for my robots

Admittedly, unlike nature’s swarms, my robotic swarm does not come about with graceful ease. To some extent, my robots are similar to living creatures. They use camera sensors to observe their environment the way fish use eyes. Instead of a brain, they have a tiny computer to process their observations and make decisions based on them. Motors, in place of muscles, allow them to move through and physically interact with their environment. Even their bodies resemble those of fish, since fish are highly efficient swimmers.

And yet, in the way they perceive their world, my robots are very different from fish. They have a hard time distinguishing between meaningful information and garbage. Their sensors are noisy and do not always represent the true environment. Their actuators struggle to accurately execute the commands coming from the robot’s computer. Luckily, I can control their environment for them. To avoid overwhelming my robots, I deliberately limit the amount of sensory input they can consider for decision-making and keep their environmental conditions as steady as possible. Still, I have to prepare them for all eventualities. I must anticipate each scenario a robot could experience, and write instructions for handling every situation specifically. I explicitly account for all probable sources of failure in my robot design. As a consequence, the robots work well only as long as the environment is consistent with what they were designed for.

For instance, one sensor on my robot is a photodiode, which measures light intensity. The onboard computer sees a continuous signal that peaks whenever the photodiode faces a light source. This way, the robot can turn in place until it detects a light source and then swim toward it. Light detection works well as long as the light source has a constant intensity; it becomes much harder as soon as the intensity emitted by the source changes dynamically. Now the robot has to distinguish whether a perceived change in intensity is due to its own movement or due to a change in signal strength from the source. A robot might turn endlessly or swim forward without even facing the light source.
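The failure mode described above can be made concrete with a small sketch. It is an illustration under stated assumptions, not the controller running on the actual robots: the cosine sensor model, the fixed detection threshold, and the names `photodiode_reading` and `find_light` are all hypothetical.

```python
import math

def photodiode_reading(heading, bearing_to_light, source_intensity):
    """Hypothetical sensor model: the reading peaks when the robot faces
    the light and drops to zero when it faces away."""
    return source_intensity * max(0.0, math.cos(heading - bearing_to_light))

def find_light(bearing_to_light, source_intensity, threshold=0.9,
               turn_step=math.radians(10), max_steps=72):
    """Turn in place until the reading reaches a fixed threshold.

    Returns the heading at which the robot would start swimming, or None
    if it uses up max_steps turns without ever detecting the light.
    """
    heading = 0.0
    for _ in range(max_steps):
        reading = photodiode_reading(heading, bearing_to_light, source_intensity)
        if reading >= threshold:
            return heading
        heading = (heading + turn_step) % (2 * math.pi)
    return None

# Same light position, two source intensities (assumed values).
steady = find_light(math.radians(90), source_intensity=1.0)
dimmed = find_light(math.radians(90), source_intensity=0.5)
```

With the full-intensity source, `steady` is a heading within a few tens of degrees of the light. With the dimmed source, the reading never reaches the fixed threshold, so `dimmed` is `None`: the robot keeps turning. A threshold tuned for one intensity is exactly the kind of hard-coded assumption that breaks when the environment changes.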

Powers of collectives

Single robots are unreliable for the aforementioned reasons. I want to know if we can leverage their abilities and mitigate their weaknesses by organizing them into a collective. What might be possible if my robots acted in concert through coordination?

Now, building a swarm of robots is not as simple as building one robot many times. Each robot has to be inexpensive enough that I can afford many of them. And each one has to be small so that I can test all of them together in a reasonably sized test bed. If they were too large for my lab, I could neither control the environment nor install an observation and tracking system to analyze the swarm. Small size and low cost limit the complexity allowed in my robot design and force me to look for parsimonious approaches to collective behaviors. In a robot the size of your palm, space for the computing hardware that makes autonomous artificial intelligence possible is at a premium.

Additionally, every robot has to be identical to all the others. I do not want any robot in my swarm to be different, to be more or less capable. No robot should take the lead and guide the others. Rather, they should all communicate locally with each other, and their local decisions should propagate through the collective. This gives the swarm two important properties: it is robust to the loss of individuals, and it is scalable, i.e., the total number of robots in the swarm does not influence the complexity of decision-making.

Nature offers an example: certain schools of fish feel safer in dark and shady spots. “There is evidence that each individual fish adjusts its swimming speed with regard to the momentary satisfaction with the environment, i.e., swimming faster in bright areas and slower in dark areas,” a leading researcher in collective animal behavior explained during a talk I attended recently. “Collectively, this individual behavior is enough to keep the school navigating from dark spot to dark spot.” The behavior is self-organized and no fish leads the school. In fact, fish at the head of a school are frequently replaced by dissatisfied companions that overtake them from behind at higher speeds.
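A minimal sketch shows why such a speed rule biases where an individual spends its time. It does not model a school, and the linear mapping, the speed limits, and the names `swim_speed` and `time_in_patch` are assumptions made purely for illustration.

```python
def swim_speed(brightness, slow=0.2, fast=1.0):
    """Hypothetical rule: map local brightness in [0, 1] linearly to a
    swimming speed, slow in the dark and fast in bright light."""
    brightness = min(1.0, max(0.0, brightness))
    return slow + (fast - slow) * brightness

def time_in_patch(length, brightness):
    """Time needed to cross a patch of the given length at the local speed."""
    return length / swim_speed(brightness)

# Two patches of equal length, one dark and one bright (assumed values).
dark_time = time_in_patch(1.0, brightness=0.0)
bright_time = time_in_patch(1.0, brightness=1.0)
```

With these assumed limits, a fish needs five times as long to cross the dark patch as the bright one, so it lingers in the shade without ever deciding to go there. How this individual bias turns into coordinated movement of a whole school is the part the sketch leaves out.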

Need for physical experiments

I want my robots to start with simpler behaviors. Which rule, for instance, would bring the dispersed robots of a collective together at a single spot of the test bed that is not fixed in advance? Could each robot just repeatedly swim toward the center of all other robots it observes? In my daily work, I test numerous assumptions like this one and integrate sets of rules that work with high probability into reliable algorithms.
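The candidate rule can be tried out in an idealized sketch. It assumes that every robot sees every other robot perfectly and moves exactly as commanded, which is precisely what real robots cannot do; the step size and the names `step_toward_centroid` and `spread` are hypothetical.

```python
import math

def step_toward_centroid(me, observed, step=0.1):
    """Move a fraction `step` of the way toward the mean position of the
    robots this robot observes. Positions are (x, y, z) tuples."""
    if not observed:
        return me
    n = len(observed)
    centroid = tuple(sum(p[i] for p in observed) / n for i in range(3))
    return tuple(m + step * (c - m) for m, c in zip(me, centroid))

def spread(positions):
    """Largest distance of any robot from the mean position of the swarm."""
    n = len(positions)
    center = tuple(sum(p[i] for p in positions) / n for i in range(3))
    return max(math.dist(p, center) for p in positions)

# Three dispersed robots (assumed start positions).
positions = [(0.0, 0.0, 0.0), (2.0, 0.0, 1.0), (0.0, 2.0, 0.5)]
initial = spread(positions)
for _ in range(50):
    # Synchronous update: each robot observes all the others, not itself.
    positions = [
        step_toward_centroid(p, positions[:i] + positions[i + 1:])
        for i, p in enumerate(positions)
    ]
final = spread(positions)
```

In this noise-free setting the spread shrinks steadily and the robots meet at a point determined by where they started, not by any predefined target. Whether the rule still works with limited fields of view, noisy sensors, and imprecise actuators is the question that only physical experiments can answer.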

Answering such behavioral questions in the lab is already a significant improvement over computer simulations in idealized virtual worlds. In a simulation, not only the robots but also their environments are engineered. A simulation cannot capture all the coupled physics present even in an artificial test environment. Simplifications neglect the complexity of a real physical system. Typical theoretical assumptions include continuous and instantaneous messaging, no message loss or corruption, and perfect and symmetrical distance measurements. Real-world implementations can almost fulfill these assumptions, but errors are still possible. Such errors may go unobserved at small scales, but as numbers increase, even a rare error becomes almost certain to occur. This is not a problem if errors stay locally contained, but a big one if they propagate through a collective. This is why we need physical systems to bridge this reality gap and systematically improve the robustness of algorithms for collective behaviors.
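The scaling argument can be checked with a short calculation. It assumes that errors occur independently with the same small probability per message, which is a simplification; the error rate and the name `prob_at_least_one_error` are hypothetical.

```python
def prob_at_least_one_error(p, n):
    """Probability that at least one of n independent events fails,
    given a per-event failure probability p."""
    return 1.0 - (1.0 - p) ** n

# Assumed error rate: one corrupted message in a thousand.
p = 0.001
few_messages = prob_at_least_one_error(p, 10)
many_messages = prob_at_least_one_error(p, 10_000)
```

With ten messages the chance of seeing any error is just under one percent, so a short test run will usually look flawless. With ten thousand messages the chance exceeds 99.9 percent, which is why an algorithm has to tolerate such errors instead of assuming they never happen.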

Bridging the gap between intelligence and artificial intelligence

Watching animal swarms allows us to learn how collective behaviors emerge. Every attempt to replicate collective behaviors with artificial intelligences requires understanding local decision-making. Observations in the local environment build the basis for decisions. We need to learn how to make more complete observations, how to filter meaningful data more reliably, and how to reach more intelligent decisions on robotic systems.

It is one thing to search through a well-constrained database on a bulky supercomputer. It is quite another thing to navigate a messy world with an embodied intelligence inside a tiny autonomous robot. Being intelligent enough to learn in an ambiguous world is much harder than getting trained from a preselected data set.

I am excited about the day I will release my robots into the ocean and see how they cope. But this will only happen when I have run enough successful simulations and test runs in the lab. To this day, artificial intelligence still requires artificial environments to develop. This is how I experience the gap between intelligence and artificial intelligence.

Watch an underwater swarm robot in action: https://www.youtube.com/watch?v=MOhHQ_GRQ48

The articles on the Reatch blog reflect the personal opinions of their authors and do not necessarily correspond to those of Reatch or its members.
